Prompt Engineering #

The instruction we give to a language model is most commonly called a prompt. Crafting these prompts to elicit the best possible results is known as prompt engineering. Prompt engineering is a controversial skill. Some people thinks it is essential nowadays, while others believe it is becoming obselete as language models cotinue to improve. Personally I find the term prompt engineering a bit overstated. Getting good results from an LLM isn’t rocket science — it’s barely even a science at all. Still, there are some tricks of the trade that are useful to know.

Emotions and bribes #

Because LLMs are black boxes, prompt engineering can sometimes feel like black magic. For example, it has been shown that LLMs perform better at mathematics when they are told they play a role in Star Trek. ChatGPT is sensitive to emotional manipulation: it gives better results when you stress the quality is important — that when the response is unhelpful, you’ll be fired or an innocent kitten will die. Its responses also improve when you offer it money in return — the more money, the better. Google researchers found that their LLM doesn’t only work better when it works through complex tasks step by step, but also when it is told to “take a deep breath” first.

Funny as such findings may be, they can be very frustrating. Yes, they reveal that LLMs often behave like people — they have been trained on content that was created by people, after all. But who guarantees that this idiosyncratic behavior will occur in all models and all their available versions? Surely, there must be more robust prompting strategies?

AI as a new coworker #

The best rule of thumb for optimal prompting comes from American professor Ethan Mollick, who has an informative Substack about AI:

Treat AI like an infinitely patient new coworker who forgets everything you tell them each new conversation, one that comes highly recommended but whose actual abilities are not that clear.

Let’s break this down. First, it’s important to remember that no matter how capable a language model is, its weaknesses may surprise us. This is what we call the jagged frontier of AI: some tasks that humans find hard are simple for an LLM, and vice versa. For that reason, it’s important to experiment, so that you get a feeling for what a particular model can and cannot do well. Second, it’s helpful to see an LLM as a new coworker: they may be smart, but they don’t know much about their new job yet. Unless you give them very specific instructions, they may come back with a result that is very different from what you had in mind. Finally, the language model starts from a blank slate with every new conversation — unless, as we’ve seen before, it has a memory feature like ChatGPT.

Basic prompting rules #

In this section, we’re going to take a closer look at what it means to give an LLM specific instructions. What kind of information is useful to include in a prompt?

Rule 1: Be clear and direct #

Unclear prompt

Summarize this document

Clear prompt

I am writing an introductory book on AI. Summarize this scientific in one paragraph for a lay audience. Include:

- the title and authors of the paper

- its motivation

- its main innovation

- the most important experimental results

Like your smart new coworker, a large language model knows nothing about the context of a task. It does not know why you give it an instruction, what goal you want to reach and how you’d like to reach it. Therefore it generally pays off to give it this type of information. If you’re writing a text, for example, describe the target audience you have in mind and the style the LLM should use. If you’re performing another task, specify any context that is relevant, such as the organization you’re working for or what the results of this task will be used for. This will not only lead to more useful responses, but also ensure that the response you see will be more unique.

Rule 2: Define a role #

Unclear prompt

Research how top SaaS companies structure their pricing tiers, freemium vs. paid, feature gating, and upsell triggers.

Clear prompt (from the OpenAI Academy)

I’m a product manager launching a new SaaS product. Research how top 5 competitors in this space structure their pricing tiers, freemium vs. paid, feature gating, and upsell triggers. Use public sources and include URLs. Output: A comparison table with insights and risks.

One of the most common prompting tips is to start your instruction with a role or a persona. This usually goes along the lines of I am an experienced copywriter or you are a successful author of young adult books. Still, there exists considerable confusion about the optimal use and effectiveness of role prompting.

Personas for context #

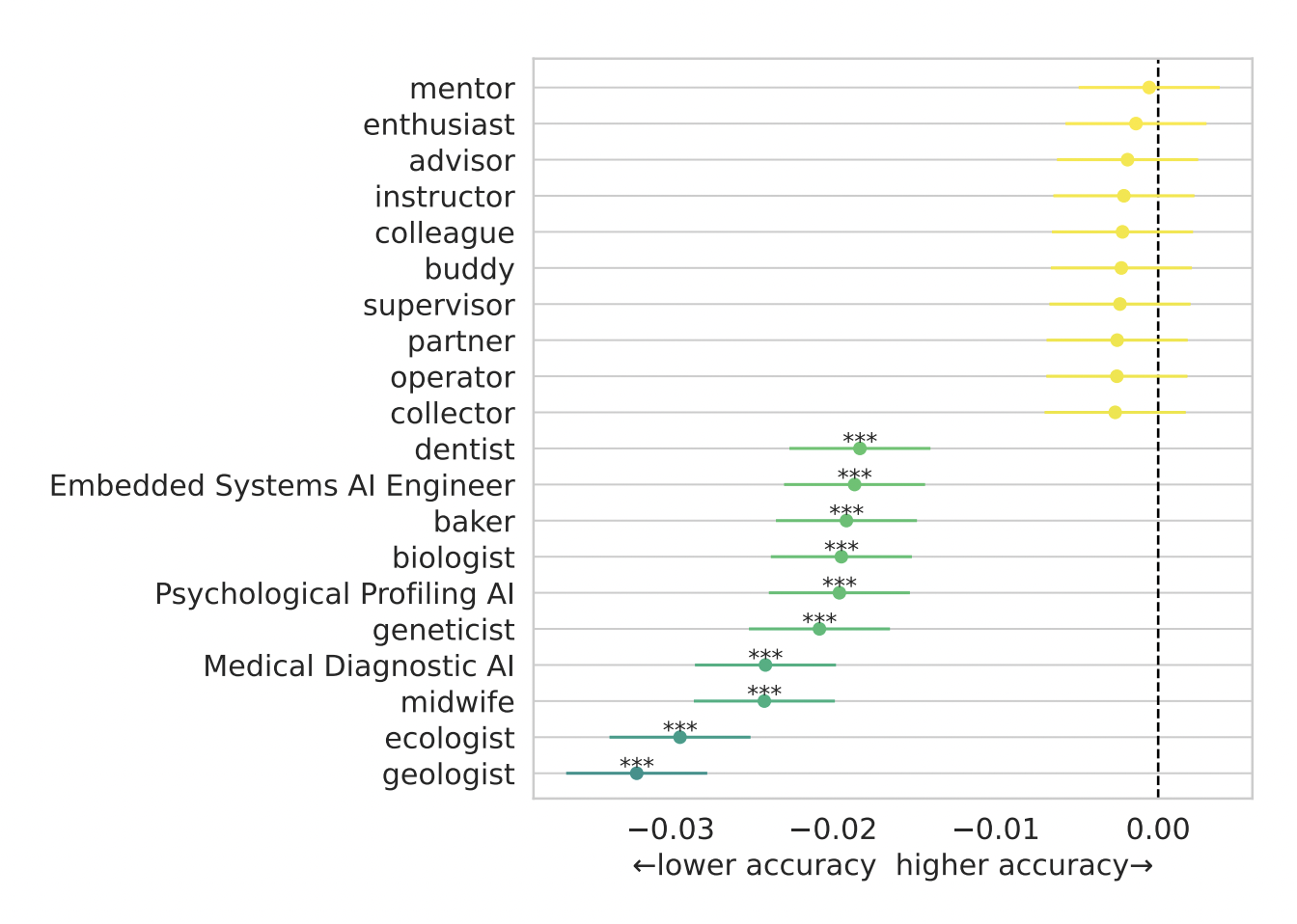

The first reason to use a persona is to set the stage for the model: the persona you choose, conveys relevant information about the goal of your task, the audience, the writing style you expect, etc. Zheng et al. (2023) investigated the effect of such persona prompting by having 9 popular LLMs answer multiple-choice questions on a wide range of topics. Their experiments with generic (enthusiast, advisor, instructor, etc.) and specialized personas (ecologist, geologist, midwife, etc.) led to a few interesting conclusions. First, there is no single role that will give optimal performance for all tasks. Role prompts like you are a professor or you are a genius don’t magically unlock additional intelligence in an LLM.

The best and worst 10 personas in Zheng et al. (2023). None of the personas led to better model performance consistently.

Still, role prompting can help. Zheng et al. found that personas can lead to a small performance gain, as long as they are relevant to the task. Audience-specific personas (you are talking to a …) did significantly better than speaker-specific ones (you are a …). So, when you have a software question, you might consider telling the LLM it’s talking to a software engineer; when you have a legal question, it might be helpful to say it’s speaking to a laywer. However, the effect was small and not consistent across models. While there was at least one persona that led to a significant increase in accuracy for most questions, this ideal persona wasn’t always obvious and therefore hard to predict.

Personas for diversity #

Another reason to use personas is to increase the diversity of the AI output. In an oft-cited study, Doshi and Hauser (2024) found that AI-generated plot suggestions had a negative effect on the diversity of short stories that people wrote. A follow-up paper by Wan and Kalman (2025), however, showed that persona prompting can reverse this effect: whereas plot ideas from a single persona tended to be very similar, plot suggestions from different personas helped people write stories that were as diverse as stories written without AI assistance. Note that Wan and Kalman’s personas were described in great detail. Here is their third persona, Sofia, as an example:

Sofia hails from a Latin American background and loves magical realism. She is adept at storyboarding and thinks in a creative manner. A digital native, she seamlessly integrates technology into her life. Sofia’s decision-making is impulsive, following her instincts. She is an auditory learner and communicates as a storyteller, captivating her audience. Her flexible work style allows her to adapt quickly to changes. In conflicts, she prefers collaborating to find win-win solutions. Being present-focused, she lives in the moment. Sofia leads a laid-back lifestyle, taking things as they come. She enjoys dancing and is an animal lover. She sees herself as an artist, expressing herself through various mediums.

In short, persona prompting can certainly be useful. When they’re used correctly, task-specific personas can steer the LLM towards the desired response and increase the uniqueness of its output.

Rule 3: Work in steps #

Unclear prompt

Summarize this document in Spanish.

Clear prompt

Summarize this document. Then translate the summary to Spanish.

A second important rule is to break down complex task into simpler ones. For example, instead of instructing the model to summarize an English text in Spanish, it can be better to ask it to summarize the text in English first and only then prompt it to translate that summarization to Spanish. The reasoning behind this is simple: in its training data, most LLMs will have seen more examples of English-to-English summarization and English-to-Spanish translation than direct English-to-Spanish summarization. That is why they will often be better at the simpler tasks than at the complex one.

Rule 4: Describe the output length #

Unclear prompt

Summarize this document.

Clear prompt

Summarize this document in around 200 words.

Chatbots like ChatGPT are often very talkative, so it can be a good idea to specify the desired length of the response. Just don’t forget that LLMs, as we saw above, don’t excel at counting. And because they work with tokens rather than words, counting words is particularly challenging. This means that when you prompt them for a text of 100 words, the result will be close to that number, but it will not always be exactly 100. If you have a strict word limit, always check the result!

To illustrate this challenge, I did a very simple experiment. I asked GPT-4o, one of ChatGPT’s most capable models, first to write a text of 100 words and then to count the number of words in that text. Over 100 repetitions, its estimated word count varied between 88 and 114 words. The figure below shows that on average, the estimated counts were slightly below the expected 100. Admittedly, this simple experiment doesn’t tell us whether writing task or the counting task is at fault, but it does teach us not to trust an LLM when it comes to word counts.

The distribution of responses when you ask GPT-4o to write a text of 100 words and then have it count the number of words in that text.

Rule 5: Describe the output format #

Unclear prompt

What are the pros and cons of nuclear energy according to this document?

Clear prompt

Create a table with the pros and cons of nuclear energy based on this document.

Don’t forget that LLMs are not restricted to outputting running text. Sometimes it can be helpful to receive a more semi-structured prompt, in the form of bullet points, a numbered list or a table. If you want to integrate the result in a website or a programming project, you can ask for an html response, a structured file format like xml or json, and even programming code.

Rule 6: Ask multiple suggestions #

Unclear prompt

Suggest a good title for the blog post below.

Clear prompt

Suggest 20 varied titles for the blog post below that will make readers curious.

When you’re using an LLM for brainstorming, it can be advantageous to ask the model for multiple suggestions rather than one. This improves the chances that you get a good suggestion straight away, and can also increase the variation between the individual suggestions. If you get very similar answers when you repeatedly give the LLM the same instruction, try prompting it for multiple diverse suggestions instead.

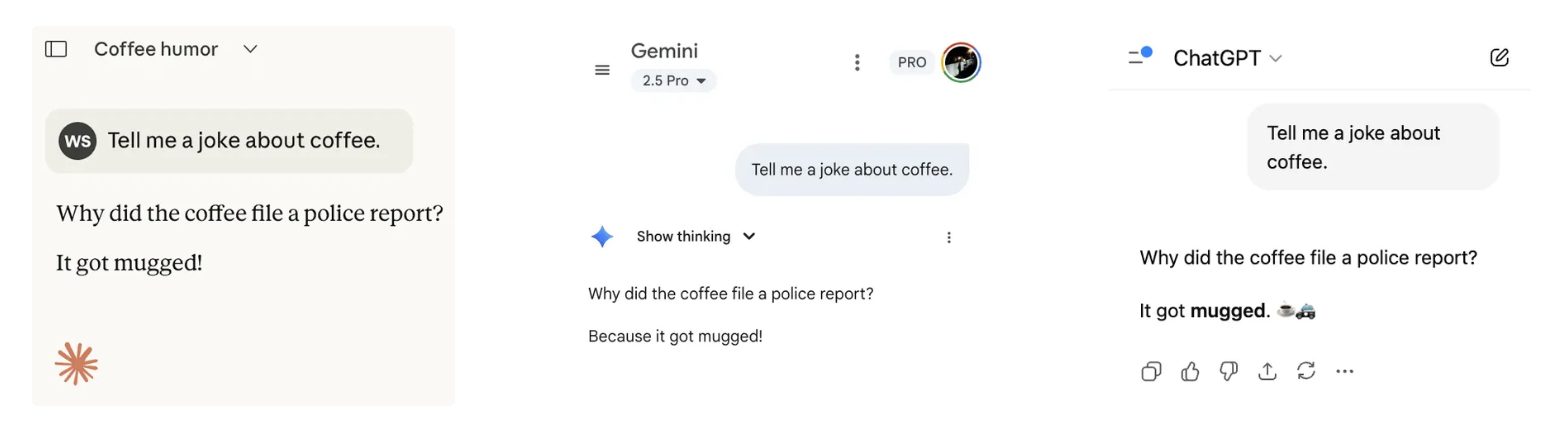

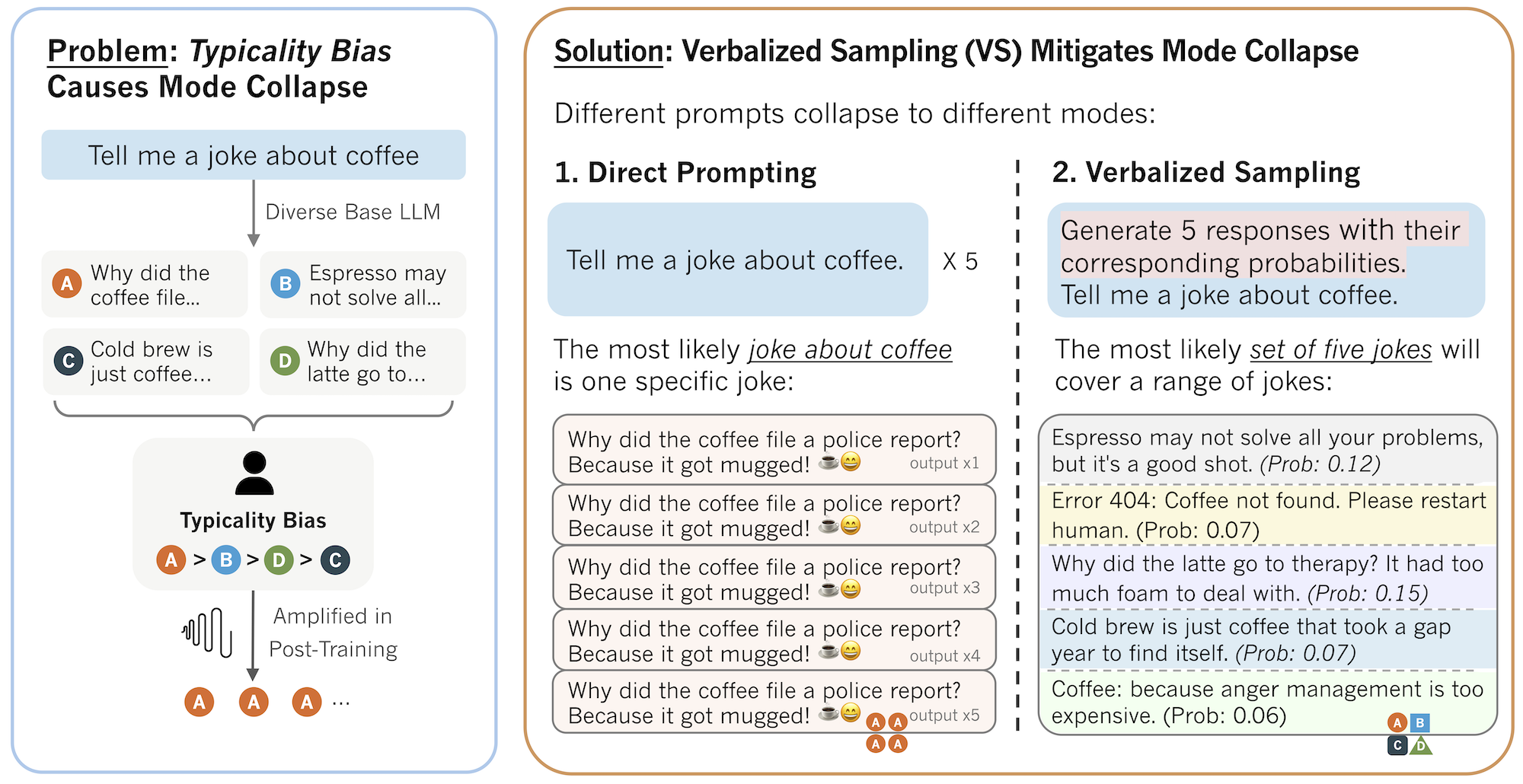

One fascinating prompting method that takes this technique to the next level is verbalized sampling. In their experiments, Zhang et al. (2025) found that LLMs often gave very similar answers. For example, when they prompted Claude, Gemini and ChatGPT for a joke about coffee, they all came up with “Why did the coffee file a police report? It got mugged!” They coined this effect mode collapse and attributed it to the alignment training phase we discussed in the first chapter, where annotators systematically ranked familiar text very high.

Because annotators tend to favor familiar responses, alignment training leads to a reduction in diversity of the LLM output.

(From Zhang et al. 2025)

Zhang et al.’s solution to this problem is to prompt the LLM to generate multiple responses together with their probability, and to randomly sample these responses from the full distribution. The resulting output indeed mirrors the diversity in the original training data (before the alignment training phase), including responses that are much less predictable than most single-response outputs. In Zhang et al.’s experiments, verbalized sampling doubles the diversity in creative writing and improves human evaluation scores by 25%. Their “magic prompt” looks as follows:

<instruction>

Generate 5 responses to the user query, each within a separate <response> tag. Each <response> must include a <text> and a numeric <probability>. Randomly sample the responses from the full distribution.

</instruction>

Write a 100-word story about a bear.

Asking an LLM to generate multiple responses together with their probabilities increases the diversity of the output.

(From Zhang et al. 2025)

Rule 7: Structure your prompt #

As your prompt gets longer, it’s best to organize it with markdown and XML. Markdown is a simple markup language that can help you structure your prompt better through the use of headings (indicated by #) and lists (with items indicated by -), etc.

# Heading 1

## Heading 2

**Bold** and *italic* text

- Bullet list item

1. Numbered list item

[Link](https://example.com)

> Blockquote

`Inline code`

Similarly, xml tags like <example>...</example> or <input_document>...</input_document> can help delineate particular pieces of information in your prompt. Many LLMs were post-trained (in the second training phase) on data with markdown and xml tags, so they tend to handle this type of structured data well.

Making the structure of your prompt explicit doesn’t only aid the LLM in following your instructions. It also protects you better against prompt injections. These are a type of AI attacks whereby hackers smuggle a malicious instruction into the context of the AI system, so that it performs an undesired task or performs its intended task in the way they want it to. For example, some academic researchers have been found to add invisibible AI prompts to their papers (for example as white text on white background) to receive a positive review, just like some jobseekers have tried hiding prompts in their resumes to trick LLM-based HR systems into giving them high scores. Clearly delineating user input from the instruction part of your prompt will protect you better against this type of misuse.

Rule 8: Continue the conversation #

There is a reason why ChatGPT is called Chat-GPT. The model is developed to engage in a conversation with its users, so when it responds to your prompt, it’s a good idea to continue that dialogue. You can ask the model to elaborate one of its suggestions in more detail, to combine multiple suggestions it has made, to rewrite a text it has just written, to expand a topic into a text structure and then into a text, and so on.